I once wrote a blog post about simplest possible snapshot-style backups using hard-links and rsync.

Things are much simpler with ZFS. Just copy all to remote Unix box with ZFS volume and make regular ZFS snapshots.

For example, the following command runs each hour on my server:

zfs snapshot -r tank/dropbox@$(date +%s_%Y-%m-%d-%H:%M:%S_%Z_hourly)

Each day:

zfs snapshot -r tank/dropbox@$(date +%s_%Y-%m-%d-%H:%M:%S_%Z_daily)

... etc. The most important date option here is %s -- Unix timestamp.

Now the problem. Soon you'll amass a huge amount of snapshots:

# zfs list -t snapshot tank/dropbox ... tank/dropbox@1648562401_2022-03-29-16:00:01_CEST_hourly 208K - 25.6G - tank/dropbox@1648566001_2022-03-29-17:00:01_CEST_hourly 224K - 25.6G - tank/dropbox@1648569601_2022-03-29-18:00:01_CEST_hourly 200K - 25.6G - tank/dropbox@1648573201_2022-03-29-19:00:01_CEST_hourly 232K - 25.6G - tank/dropbox@1648609201_2022-03-30-05:00:01_CEST_hourly 1.69M - 25.6G - tank/dropbox@1648612801_2022-03-30-06:00:01_CEST_hourly 880K - 22.0G - ...

How would you trim your snapshots? One popular method is Grandfather-Father-Son: 1, 2. This requires 3 snapshots. But what if you're rich enough to keep ~100 snapshots? Keeping just last 100 hours isn't interesting. You want also one snapshot per month. One per year (for ~3-5 years, for example).

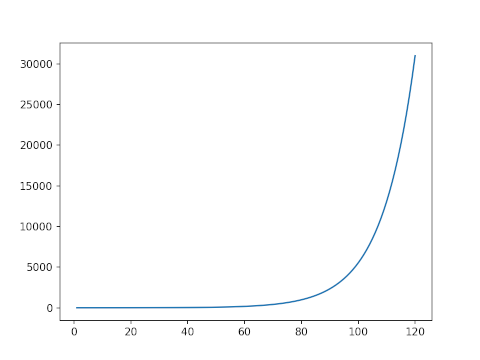

After some experiments, I created such a scale:

# These parameters are to be tuned if you want different logarithmic 'curve'... x=np.linspace(1,120,120) y=1.09**x plt.plot(x,y)

# Points (in hours). Round them and deduplicate:

tbl=set(np.floor(y).tolist()); tbl

{1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0,

9.0, 10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 17.0,

18.0, 20.0, 22.0, 24.0, 26.0, 28.0, 31.0, 34.0,

37.0, 40.0, 44.0, 48.0, 52.0, 57.0, 62.0, 68.0,

74.0, 81.0, 88.0, 96.0, 104.0, 114.0, 124.0, 135.0,

148.0, 161.0, 176.0, 191.0, 209.0, 227.0, 248.0, 270.0,

295.0, 321.0, 350.0, 382.0, 416.0, 454.0, 495.0, 539.0,

588.0, 641.0, 698.0, 761.0, 830.0, 905.0, 986.0, 1075.0,

1172.0, 1277.0, 1392.0, 1517.0, 1654.0, 1803.0, 1965.0, 2142.0,

2335.0, 2545.0, 2774.0, 3024.0, 3296.0, 3593.0, 3916.0, 4269.0,

4653.0, 5072.0, 5529.0, 6026.0, 6569.0, 7160.0, 7804.0, 8507.0,

9272.0, 10107.0, 11016.0, 12008.0, 13089.0, 14267.0, 15551.0, 16950.0,

18476.0, 20139.0, 21951.0, 23927.0, 26081.0, 28428.0, 30987.0}

Roughly speaking, we will keep snapshot that is roughly 1 hour old. 2 hours old. 3 hours old... 37 hours old... 74 hours old... etc, as in table.

Using Jupyter for Python again, I'm getting a list of all dates:

now=math.floor(time.time()) SECONDS_IN_HOUR=60*60 # Generate date/time points. Only one snapshot to be kept between each adjacent 'points'. points=sorted(list(map(lambda x: datetime.datetime.fromtimestamp(now-x*SECONDS_IN_HOUR), tbl))); points [datetime.datetime(2018, 9, 18, 9, 56, 10), datetime.datetime(2019, 1, 2, 23, 56, 10), datetime.datetime(2019, 4, 10, 19, 56, 10), datetime.datetime(2019, 7, 9, 13, 56, 10), datetime.datetime(2019, 9, 29, 21, 56, 10), datetime.datetime(2019, 12, 14, 8, 56, 10), datetime.datetime(2020, 2, 21, 15, 56, 10), datetime.datetime(2020, 4, 25, 6, 56, 10), datetime.datetime(2020, 6, 22, 13, 56, 10), datetime.datetime(2020, 8, 15, 1, 56, 10), datetime.datetime(2020, 10, 3, 3, 56, 10), datetime.datetime(2020, 11, 17, 3, 56, 10), datetime.datetime(2020, 12, 28, 11, 56, 10), datetime.datetime(2021, 2, 4, 8, 56, 10), datetime.datetime(2021, 3, 11, 3, 56, 10), datetime.datetime(2021, 4, 12, 1, 56, 10), datetime.datetime(2021, 5, 11, 8, 56, 10), datetime.datetime(2021, 6, 7, 4, 56, 10), datetime.datetime(2021, 7, 1, 19, 56, 10), ... datetime.datetime(2022, 3, 31, 10, 56, 10), datetime.datetime(2022, 3, 31, 12, 56, 10), datetime.datetime(2022, 3, 31, 14, 56, 10), datetime.datetime(2022, 3, 31, 16, 56, 10), datetime.datetime(2022, 3, 31, 18, 56, 10), datetime.datetime(2022, 3, 31, 19, 56, 10), datetime.datetime(2022, 3, 31, 21, 56, 10), datetime.datetime(2022, 3, 31, 22, 56, 10), datetime.datetime(2022, 3, 31, 23, 56, 10), datetime.datetime(2022, 4, 1, 0, 56, 10), datetime.datetime(2022, 4, 1, 1, 56, 10), datetime.datetime(2022, 4, 1, 2, 56, 10), datetime.datetime(2022, 4, 1, 3, 56, 10), datetime.datetime(2022, 4, 1, 4, 56, 10), datetime.datetime(2022, 4, 1, 5, 56, 10), datetime.datetime(2022, 4, 1, 6, 56, 10), datetime.datetime(2022, 4, 1, 7, 56, 10), datetime.datetime(2022, 4, 1, 8, 56, 10), datetime.datetime(2022, 4, 1, 9, 56, 10), datetime.datetime(2022, 4, 1, 10, 56, 10), datetime.datetime(2022, 4, 1, 11, 56, 10)]

For the most recent times we will keep more snapshots. For more distant times -- just a few.

Let's see statistics for years, months, days. How many snapshots to be kept for each year/month/day?

# Snapshots kept, per each year:

Counter(map(lambda x: x.year, points))

Counter({2018: 1, 2019: 5, 2020: 7, 2021: 18, 2022: 72})

# Snapshots kept, per each month:

Counter(map(lambda x: x.year*100 + x.month, points))

Counter({201809: 1,

201901: 1,

201904: 1,

201907: 1,

201909: 1,

201912: 1,

202002: 1,

202004: 1,

202006: 1,

...

202107: 2,

202108: 1,

202109: 2,

202110: 2,

202111: 3,

202112: 3,

202201: 5,

202202: 8,

202203: 47,

202204: 12})

# Snapshots kept, per each day:

Counter(map(lambda x: x.year*10000 + x.month*100 +x.day, points))

Counter({20180918: 1,

20190102: 1,

20190410: 1,

20190709: 1,

20190929: 1,

20191214: 1,

20200221: 1,

20200425: 1,

20200622: 1,

...

20220319: 1,

20220320: 1,

20220321: 1,

20220322: 1,

20220323: 2,

20220324: 1,

20220325: 2,

20220326: 2,

20220327: 2,

20220328: 3,

20220329: 4,

20220330: 6,

20220331: 12,

20220401: 12})

See full Jupyter notebook: HTML, viewable right here, Notebook file.

You can find more about logarithms in my book.

In short, we keep one (random or just first) snapshot between two adjacent timestamps. All the rest snapshots are just deleted.

This Python script do this. It requires remote host name (server you use for backups, may be user@localhost) and dataset name. Run it with dry run option (0) for the first time -- just in case.

#!/usr/bin/env python3

import subprocess, sys

import math, time, datetime

if len(sys.argv)!=4:

print ("Usage: dry_run host dataset")

print ("dry_run: 0 or 1. 1 is for dry run. 0 - commit changes.")

print ("host: for example: user@host")

print ("dataset: for example: tank/dropbox")

exit(1)

dry_run=int(sys.argv[1])

if dry_run not in [0,1]:

print ("dry_run option must be 0 or 1")

exit(0)

host=sys.argv[2]

dataset=sys.argv[3]

def get_snapshots_list():

rt={}

with subprocess.Popen(["ssh", host, "zfs list -t snapshot "+dataset],stdout=subprocess.PIPE, bufsize=1,universal_newlines=True) as process:

for line in process.stdout:

if "NAME" in line:

continue

line=line.rstrip().split(' ')[0]

line2=line.split('_')[0].split('@')[1]

rt[int(line2)]=line

return rt

snapshots=get_snapshots_list()

# These parameters are to be tuned if you want different logarithmic 'curve'...

points=sorted(list(set([math.floor(1.09**x) for x in range(1,120+1)])))

# points in hours

#print (points)

now=math.floor(time.time())

# points in UNIX timestamps

SECONDS_IN_HOUR=60*60

points_TS=sorted(list(map(lambda x: now-x*SECONDS_IN_HOUR, points)), reverse=True)

points_TS.append(0) # remove the oldest snapshot, if it's not in range

prev=now

# we are going to keep only one snapshots between each range

# a snapshot to be picked randomly, or just the first/last

# if there is only one snapshot in the range, leave it

for p in points_TS:

print ("range", prev, p, datetime.datetime.fromtimestamp(prev), datetime.datetime.fromtimestamp(p))

range_hi=prev

range_lo=p

print ("snapshots between:")

snapshots_between={}

for s in snapshots:

# half-closed interval:

if s>range_lo and s<=range_hi:

print (s, snapshots[s])

snapshots_between[s]=snapshots[s]

print ("snapshots_between total:", len(snapshots_between))

if len(snapshots_between)>1:

snapshots_between_vals=list(snapshots_between.values())

# going to kill all snapshots except the first

print ("keeping this snapshot:", snapshots_between_vals[0])

for to_kill in snapshots_between_vals[1:]:

print ("removing this snapshot:", to_kill)

if dry_run==0:

process=subprocess.Popen(["ssh", host, "zfs destroy "+to_kill])

process.wait()

prev=p

For example, in my case, after run, these backups are left:

root@centrolit ~ # zfs list -t snapshot tank/dropbox NAME USED AVAIL REFER MOUNTPOINT tank/dropbox@1641088802_2022-01-02-03:00:02_CET_hourly 1.23G - 2.05G - tank/dropbox@1641726001_2022-01-09-12:00:01_CET_hourly 2.08G - 8.38G - tank/dropbox@1642348801_2022-01-16-17:00:01_CET_hourly 52.7G - 67.3G - tank/dropbox@1642874401_2022-01-22-19:00:01_CET_hourly 4.29G - 22.3G - tank/dropbox@1643792401_2022-02-02-10:00:01_CET_hourly 16.3G - 33.2G - tank/dropbox@1644213601_2022-02-07-07:00:01_CET_hourly 794M - 12.2G - tank/dropbox@1644602401_2022-02-11-19:00:01_CET_hourly 57.2M - 10.8G - tank/dropbox@1645279201_2022-02-19-15:00:01_CET_hourly 4.77G - 47.9G - tank/dropbox@1645833601_2022-02-26-01:00:01_CET_hourly 8.47G - 59.6G - tank/dropbox@1646308802_2022-03-03-13:00:02_CET_hourly 455M - 39.8G - tank/dropbox@1646686801_2022-03-07-22:00:01_CET_hourly 1.29G - 39.0G - tank/dropbox@1647018001_2022-03-11-18:00:01_CET_hourly 13.7M - 37.2G - tank/dropbox@1647165601_2022-03-13-11:00:01_CET_hourly 1.01G - 231G - tank/dropbox@1647302401_2022-03-15-01:00:01_CET_hourly 2.54M - 233G - tank/dropbox@1647439201_2022-03-16-15:00:01_CET_hourly 25.5M - 76.9G - tank/dropbox@1647648001_2022-03-19-01:00:01_CET_hourly 607M - 17.6G - tank/dropbox@1647828001_2022-03-21-03:00:01_CET_hourly 4.34M - 21.7G - tank/dropbox@1647907201_2022-03-22-01:00:01_CET_hourly 4.37M - 22.0G - tank/dropbox@1647982801_2022-03-22-22:00:01_CET_hourly 14.8G - 35.4G - tank/dropbox@1648069201_2022-03-23-22:00:01_CET_hourly 5.18M - 22.0G - tank/dropbox@1648170001_2022-03-25-02:00:01_CET_hourly 5.38M - 28.7G - tank/dropbox@1648267201_2022-03-26-05:00:01_CET_hourly 2.46M - 28.9G - tank/dropbox@1648314001_2022-03-26-18:00:01_CET_hourly 2.80M - 28.8G - tank/dropbox@1648353601_2022-03-27-06:00:01_CEST_hourly 2.14M - 28.6G - tank/dropbox@1648429201_2022-03-28-03:00:01_CEST_hourly 1.12M - 27.9G - tank/dropbox@1648515601_2022-03-29-03:00:01_CEST_hourly 984K - 27.8G - tank/dropbox@1648555201_2022-03-29-14:00:01_CEST_hourly 1.06M - 25.6G - tank/dropbox@1648609201_2022-03-30-05:00:01_CEST_hourly 2.07M - 25.6G - tank/dropbox@1648630801_2022-03-30-11:00:01_CEST_hourly 944K - 22.0G - tank/dropbox@1648695601_2022-03-31-05:00:01_CEST_hourly 552K - 22.6G - tank/dropbox@1648713601_2022-03-31-10:00:01_CEST_hourly 392K - 22.8G -

This Python script taking into account only UNIX timestamp (after '@' character). The date/time after -- is just for user's convenience.

You can experiment in Jupyter with these parameters to change number of snapshots and steepness of the curve...

# These parameters are to be tuned if you want different logarithmic 'curve'... x=np.linspace(1,120,120) y=1.09**x

UPD: at reddit.

Yes, I know about these lousy Disqus ads. Please use adblocker. I would consider to subscribe to 'pro' version of Disqus if the signal/noise ratio in comments would be good enough.